SimCity is magical. You get to build a world that reacts to your inputs. Create some residential zones, build a road, connect power, and people just show up. Raise taxes too high, they leave. Put industrial buildings next to houses and property values tank and people complain about pollution. If they complain too much, you can send Godzilla or a UFO to attack the city!

Building with AI is similarly magical. It’s amazing to give Claude Code or Codex some inputs and watch it spin something up.

Could this magic be combined?

TL;DR:

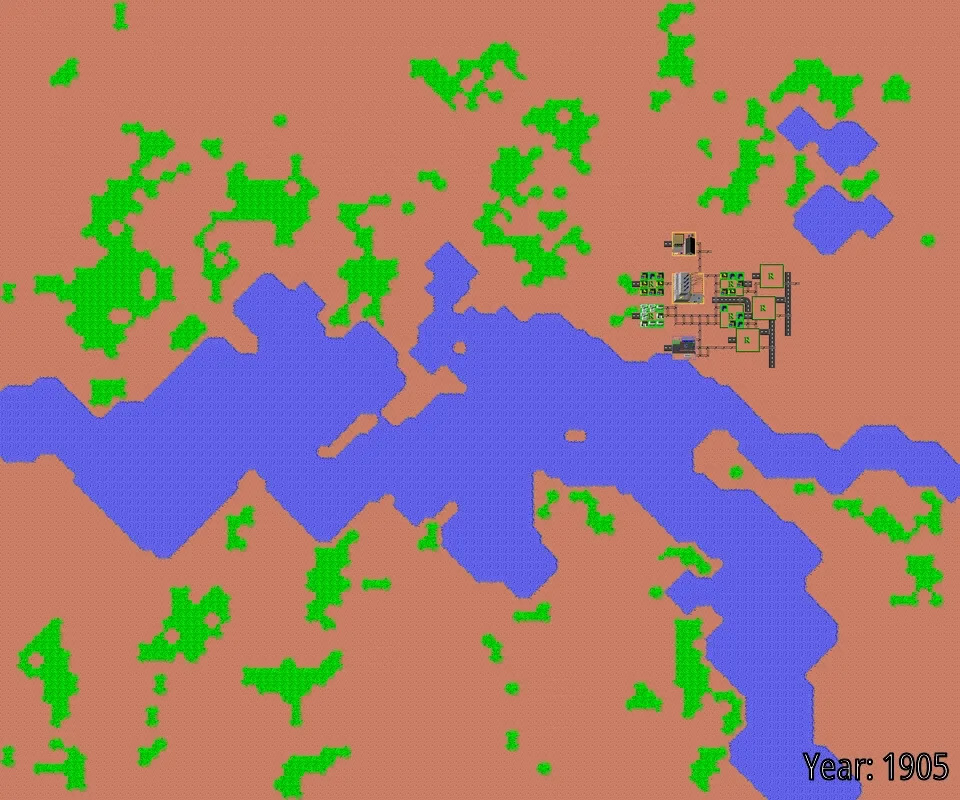

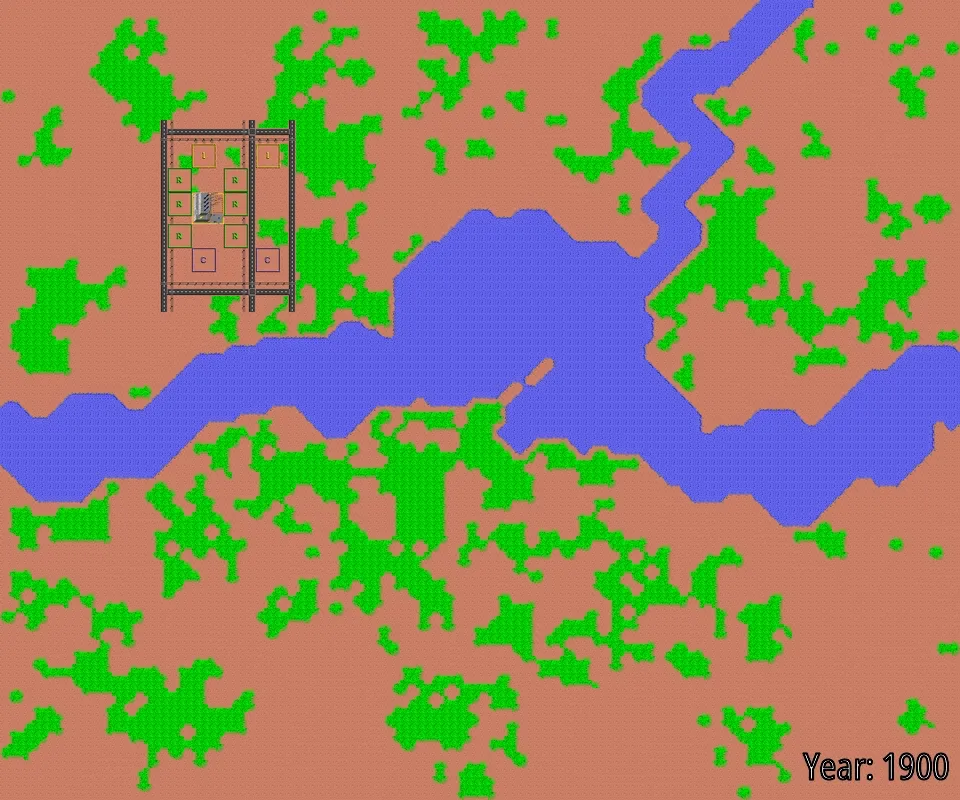

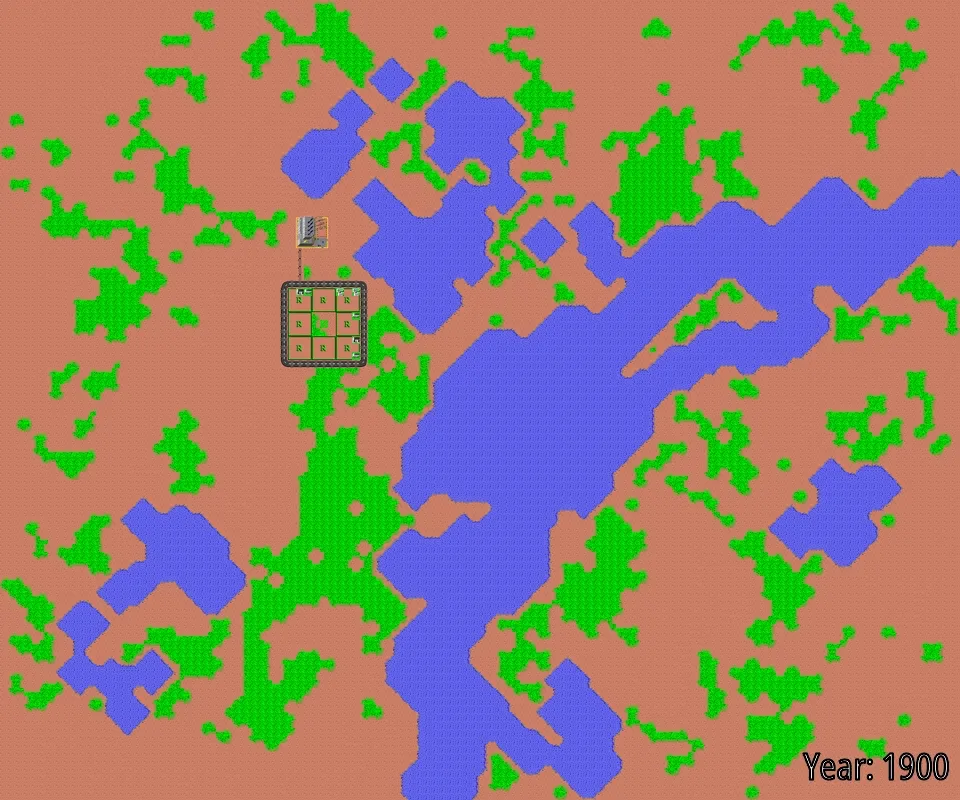

What a mess!

It didn’t go great, but it was a fun project and I did it all from my iPhone.

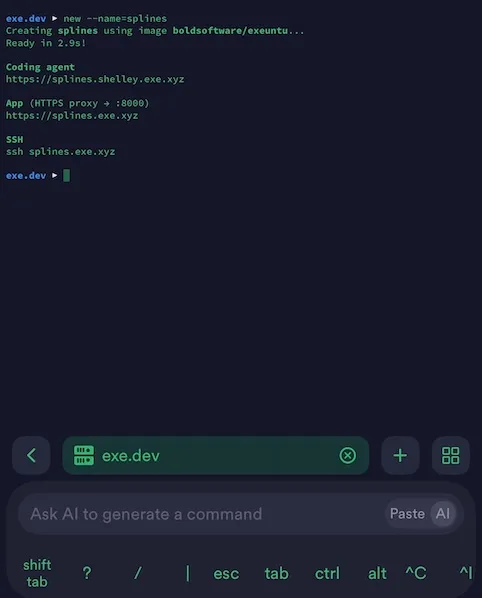

Starting with exe.dev

I recently came across exe.dev, a service that lets you create up to 25 virtual machines. New VMs spin up in seconds and come with both Claude Code and Codex installed. exe.dev also includes Shelley, its web-based coding agent, which lets you use the underlying model of your choice (Claude, Codex, etc.). It’s a great setup for prototyping and iterating, and, most importantly, everything is accessible from mobile, so I can quickly spin up coding agents from my phone.

I used the Termius iOS app to SSH into exe.dev and launched a new VM.

A fresh VM ready in less than 3 seconds!

Getting the game running

The first attempt emulated the SNES version of SimCity. This proved very tricky because we had to parse screenshots and simulate button presses. It turned into a fight just to play the game, when what I really wanted to test was the agent’s decision-making.

I went looking for alternatives and found Micropolis, the open-source release of the original SimCity. Instead of parsing screenshots, Shelley added an HTTP API directly to the game engine. Placing a residential zone became a single request: POST /api/place {"tool": "RESIDENTIAL", "x": 50, "y": 60}. The API also returned the full game state as JSON (population, funds, zone counts, demand meters) and let the simulation run as fast as the CPU allowed.
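For anyone who wants to drive a similar setup, here is a minimal client sketch using only Python’s standard library. The POST /api/place path and its JSON body come from the post; the host and port, the /api/state path, and the assumption that both endpoints respond with JSON are hypothetical, since the post does not specify them.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080"  # hypothetical host/port for the patched Micropolis server


def place_request(tool: str, x: int, y: int, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build the POST /api/place request with the JSON body shown in the post."""
    body = json.dumps({"tool": tool, "x": x, "y": y}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/place",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def place(tool: str, x: int, y: int, base_url: str = BASE_URL) -> dict:
    """Send a placement; assumes (hypothetically) that the server replies with JSON."""
    with urllib.request.urlopen(place_request(tool, x, y, base_url)) as resp:
        return json.load(resp)


def get_state(base_url: str = BASE_URL) -> dict:
    """Fetch the game state; "/api/state" is a hypothetical path, not named in the post."""
    with urllib.request.urlopen(f"{base_url}/api/state") as resp:
        return json.load(resp)
```

Keeping request construction separate from sending makes the payload easy to inspect without a running game server.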

Within hours of the pivot, we had a working city. Population: 320. Not much of a metropolis, but the plumbing worked.

Attempt 1: LLM as Mayor

The first approach was straightforward: feed Claude the current game state and let it decide what to build.
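A minimal sketch of that first loop, assuming the state is a JSON-serializable dict and that the model is asked to reply with one JSON action shaped like the /api/place body. The prompt wording, the helper names, and every tool name other than RESIDENTIAL are hypothetical.

```python
import json
from typing import Dict, Tuple

# Hypothetical tool set; only "RESIDENTIAL" appears in the post.
TOOLS = {"RESIDENTIAL", "COMMERCIAL", "INDUSTRIAL", "ROAD"}


def build_prompt(state: Dict) -> str:
    """Serialize the current game state into a prompt for the mayor model."""
    return (
        "You are the mayor of a Micropolis city.\n"
        "Current game state as JSON:\n"
        f"{json.dumps(state, sort_keys=True)}\n"
        'Reply with exactly one JSON object: {"tool": ..., "x": ..., "y": ...}'
    )


def parse_action(reply: str, width: int, height: int) -> Tuple[str, int, int]:
    """Parse the model's reply; raises ValueError (or KeyError) if it is unusable."""
    action = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    tool, x, y = action["tool"], int(action["x"]), int(action["y"])
    if tool not in TOOLS:
        raise ValueError(f"unknown tool: {tool}")
    if not (0 <= x < width and 0 <= y < height):
        raise ValueError(f"({x}, {y}) is off the map")
    return tool, x, y
```

This only checks that a reply is well-formed and on the map. Nothing here checks road connectivity or power, which is exactly where free-form placement broke down.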

Early results were rough. With full freedom to place individual zones anywhere on the map, the AI scattered buildings randomly: no road connectivity, unpowered zones, no coherent layout. LLMs aren’t spatial reasoners, and asking one to pick optimal coordinates on a 120x100 grid doesn’t work.

We (Shelley and I) also tried a two-agent system: a “Mayor” for high-level strategy and a “City Planner” for implementation details. This was overcomplicated. The agents disagreed with each other, and the context got confusing.

Experienced SimCity players have long used the “donut” layout: an 11x11 block bounded by roads, with eight 3x3 zones ringing a central 3x3 square. Shelley built a BlockBuilder that places these perfect blocks automatically. Now the LLM only has to answer one question: should the next block be residential, commercial, or industrial?
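A hypothetical sketch of what a BlockBuilder might emit for one donut block, using the (tool, x, y) shape of the /api/place body. It assumes roads are placed one tile at a time with a tool named ROAD, that a 3x3 zone is placed by its center tile, and that the middle 3x3 square is left empty; none of these details come from the post.

```python
from typing import List, Tuple

Action = Tuple[str, int, int]  # (tool, x, y), mirroring the /api/place body
BLOCK = 11  # donut block side length, including the surrounding road


def donut_block(x0: int, y0: int, zone_tool: str) -> List[Action]:
    """Hypothetical BlockBuilder: actions for one donut block with top-left tile (x0, y0)."""
    actions: List[Action] = []
    # Road ring: every tile on the perimeter of the 11x11 square.
    for dx in range(BLOCK):
        for dy in range(BLOCK):
            if dx in (0, BLOCK - 1) or dy in (0, BLOCK - 1):
                actions.append(("ROAD", x0 + dx, y0 + dy))
    # The 9x9 interior is a 3x3 grid of 3x3 zones; skip the middle one (the "hole").
    for zx in range(3):
        for zy in range(3):
            if (zx, zy) == (1, 1):
                continue
            # Zone center: skip 1 road tile, 3 tiles per earlier zone, then +1 to the center.
            actions.append((zone_tool, x0 + 2 + 3 * zx, y0 + 2 + 3 * zy))
    return actions
```

One block works out to 40 road tiles plus 8 zones, which is why collapsing all of that into a single residential/commercial/industrial choice shrinks the LLM’s job so much.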

The most fun aspect was the live dashboard where I could watch it build in real-time from my phone.

Watching the livestream after bedtime

Self-Learning Loop

We added an “Evaluator” agent that ran every 5 completed games. It reviewed recent runs and generated insights that got fed back into the Mayor’s system prompt.
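The loop can be sketched like this. The every-5-games cadence comes from the post; the class and field names, and the `evaluate` hook standing in for the Evaluator’s LLM call, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

EVAL_EVERY = 5  # from the post: the Evaluator ran every 5 completed games


@dataclass
class GameResult:
    population: int  # final population at the end of the game
    decisions: int   # number of build decisions the Mayor made


@dataclass
class LearningLoop:
    base_prompt: str
    # Hypothetical hook: in practice this would call the Evaluator LLM on recent runs.
    evaluate: Callable[[List[GameResult]], str]
    results: List[GameResult] = field(default_factory=list)
    latest_insight: Optional[str] = None

    def record(self, result: GameResult) -> None:
        self.results.append(result)
        # After every EVAL_EVERY completed games, review the most recent batch.
        if len(self.results) % EVAL_EVERY == 0:
            self.latest_insight = self.evaluate(self.results[-EVAL_EVERY:])

    def system_prompt(self) -> str:
        """The Mayor's system prompt, with the latest insights appended if any exist."""
        if self.latest_insight is None:
            return self.base_prompt
        return f"{self.base_prompt}\n\nInsights from recent games:\n{self.latest_insight}"
```

This sketch replaces the previous insight instead of accumulating them, so advice that later flips does not leave contradictory instructions in the prompt; the post does not say which approach the original setup used.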

The evolution was fascinating to watch. Early evaluations recommended conservative play:

Maintain minimum $25,000 fund reserve while growing population… Focus on high-impact decisions rather than frequent minor adjustments.

But after some prodding, and with more runs to analyze, the Evaluator reversed course:

The most successful cities achieved 8,500+ population by making 80+ aggressive decisions over 200 years, while passive strategies with only 8-11 decisions consistently failed to break 8,000 population.

By the end, the advice had completely flipped:

Rapid population growth requires continuous, aggressive zone building with minimal cash reserves. Reinvest ALL profits immediately into city infrastructure.

Results

Over 134 runs, the LLM mayor peaked at a population of 13,060. The average was around 4,100. Total API cost using Claude Haiku was about $3.30, or roughly two and a half cents per 200-year game.

Attempt 2: Reinforcement Learning

After 100+ LLM runs with incremental improvements but no real breakthroughs, I asked Shelley how else we might tackle this problem. It suggested reinforcement learning, where an agent learns through trial and error rather than reasoning about the game state. That sounded worth trying.

The first RL attempt hit the same wall the LLM did. Too many possible actions, and the agent learned the wrong lessons. It figured out that placing power plants gave a big immediate reward, so it just spammed power plants and ignored everything else.

The fix was the same insight from the LLM work: give the RL agent the block abstraction. Instead of choosing from thousands of possible tile placements, the agent picks from 90 block locations and three zone types. The same BlockBuilder code handles the details.
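With the block abstraction, the action space becomes small and discrete: 90 block slots times three zone types gives 270 actions. The sketch below decodes an action index into a block origin and zone type. It assumes a 120x100-tile map (the standard Micropolis size) tiled from the top-left corner by non-overlapping 11x11 blocks, which yields 10 columns by 9 rows, exactly 90 slots; the post does not say how its slots are laid out, and `decode_action` is a hypothetical name.

```python
from typing import Tuple

MAP_W, MAP_H = 120, 100  # assumed: standard Micropolis map size in tiles
BLOCK = 11               # side length of one donut block, roads included
COLS, ROWS = MAP_W // BLOCK, MAP_H // BLOCK  # 10 x 9 = 90 block slots
ZONE_TYPES = ("RESIDENTIAL", "COMMERCIAL", "INDUSTRIAL")
N_ACTIONS = COLS * ROWS * len(ZONE_TYPES)    # 270 discrete actions


def decode_action(action: int) -> Tuple[int, int, str]:
    """Map a discrete action index to (block_x, block_y, zone_type)."""
    if not 0 <= action < N_ACTIONS:
        raise ValueError(f"action {action} out of range [0, {N_ACTIONS})")
    slot, zone = divmod(action, len(ZONE_TYPES))
    row, col = divmod(slot, COLS)
    # Top-left tile of the chosen block; a BlockBuilder would take it from here.
    return col * BLOCK, row * BLOCK, ZONE_TYPES[zone]
```

The agent then chooses among 270 options rather than one per tile and tool, the same constraint that helped the LLM.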

Over 666 training episodes, the RL agent peaked at 18,000 population, beating the LLM.

How they compared

| | LLM Mayor | RL Mayor |
|---|---|---|
| Runs | 134 | 666 |
| Best population | 13,060 | 18,000 |
| Average population | 4,124 | 6,450 |
| API cost | $3.30 | $0 |

RL won on raw numbers, but the LLM got to decent results in the first dozen runs. The RL agent needed hundreds of episodes to get there. Both approaches improved dramatically once we constrained the action space and stopped asking the AI to handle spatial reasoning.